As AI tools grow more sophisticated, HR professionals face a new threat to hiring integrity: AI-generated applicants. From automated resumes to deepfake interviews, the line between real and artificial candidates is blurring.

Software Finder surveyed 874 hiring professionals to uncover how prepared teams are to detect and respond to this evolving risk. The results reveal a significant readiness gap and a growing demand for better detection tools.

AI-driven hiring fraud is no longer a theoretical concern. It's already happening, and it's cropping up more often in specific industries.

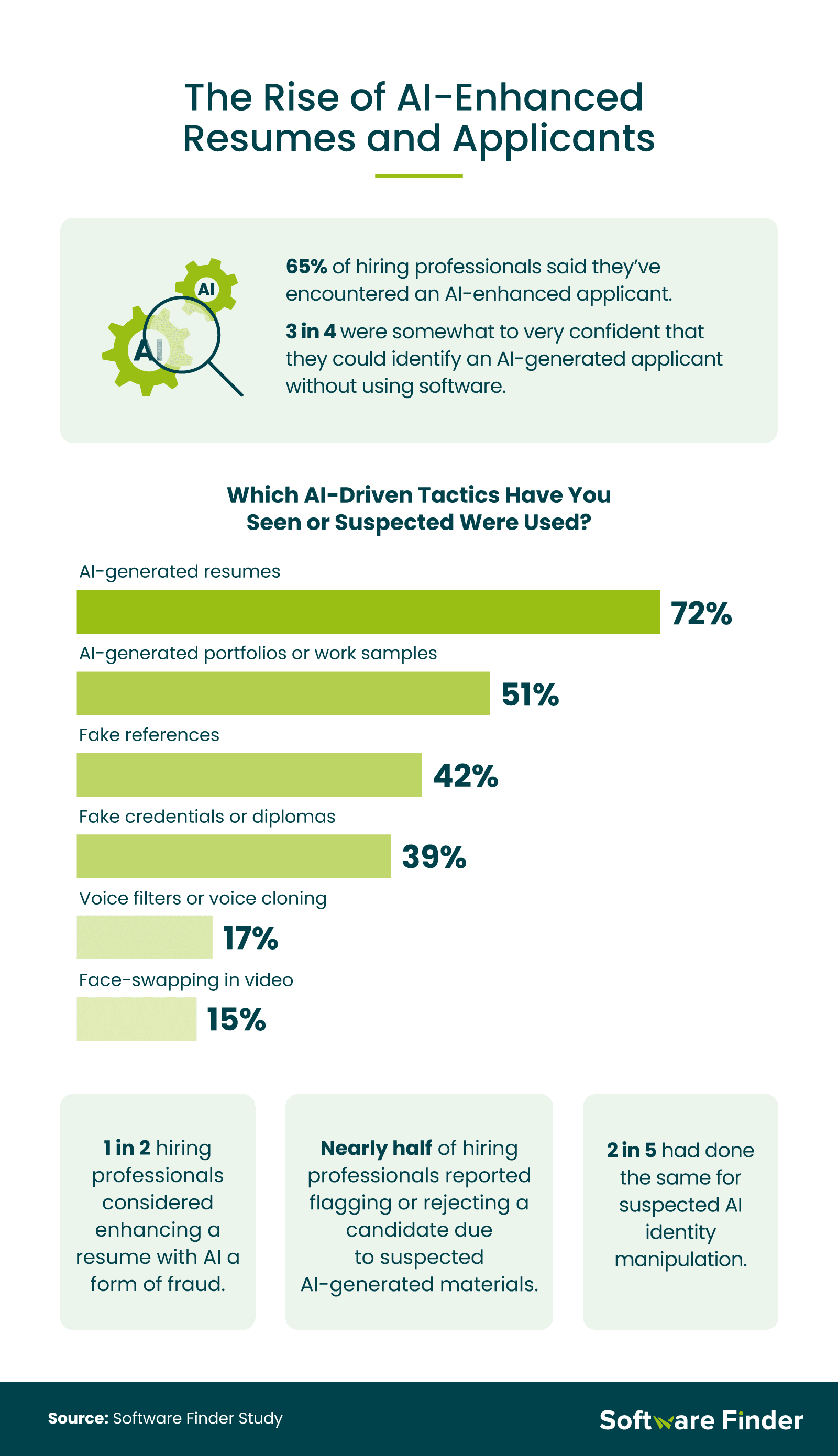

More than 7 in 10 hiring professionals have come across AI-generated resumes during the screening process. This trend signals a significant shift in candidate behavior, where job seekers are using generative tools to refine (and possibly fabricate) their qualifications and experience.

What concerns recruiters even more is the emergence of deepfake technology in video interviews. 15% of hiring professionals said they had encountered applicants using face-swapping tools to alter their appearance in real time, which raises serious ethical and verification concerns.

Most HR professionals (75%) believed they could identify AI-generated content without the help of detection software. However, this self-assurance wasn't universal, as 20% admitted they don't feel confident spotting AI use without specialized software tools.

The debate around using AI to get hired is heating up. Half of recruiters said they view AI-enhanced resumes as fraudulent and have rejected candidates on suspicion alone. Meanwhile, 40% had turned down candidates over suspected identity manipulation, such as AI voice cloning or visual alterations during interviews.

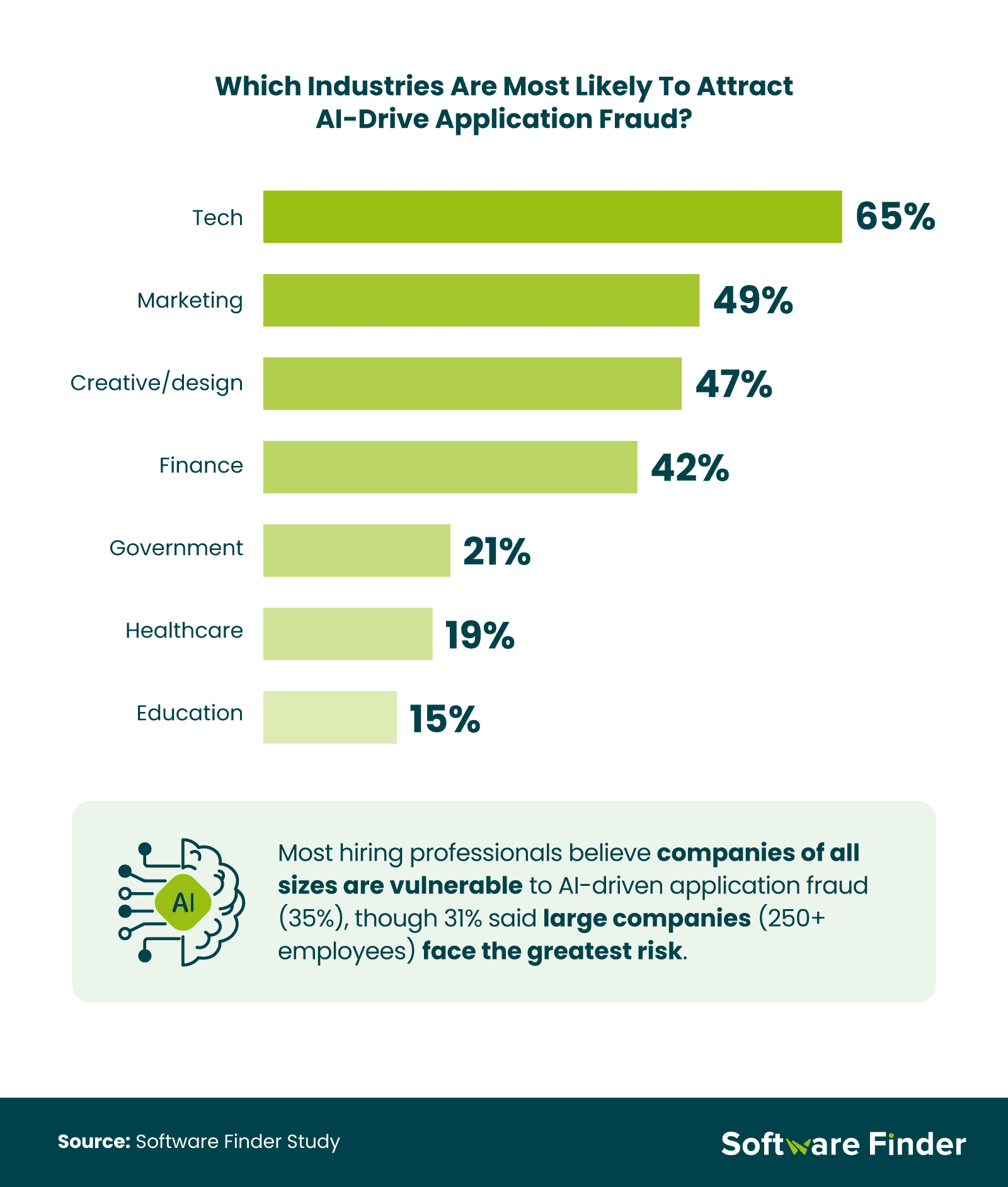

The tech industry topped the list of targets, with 65% of hiring professionals identifying it as the most susceptible to AI-generated job fraud. Marketing and creative/design followed, reflecting how easily people can fabricate portfolios and visual work with AI.

The most common view among hiring professionals (35%) was that no company is immune and that organizations of all sizes are vulnerable to AI-driven applicant fraud. Still, nearly one-third singled out large employers, those with 250 or more employees, as especially at risk.

HR teams are beginning to acknowledge their limitations in spotting AI-powered fraud.

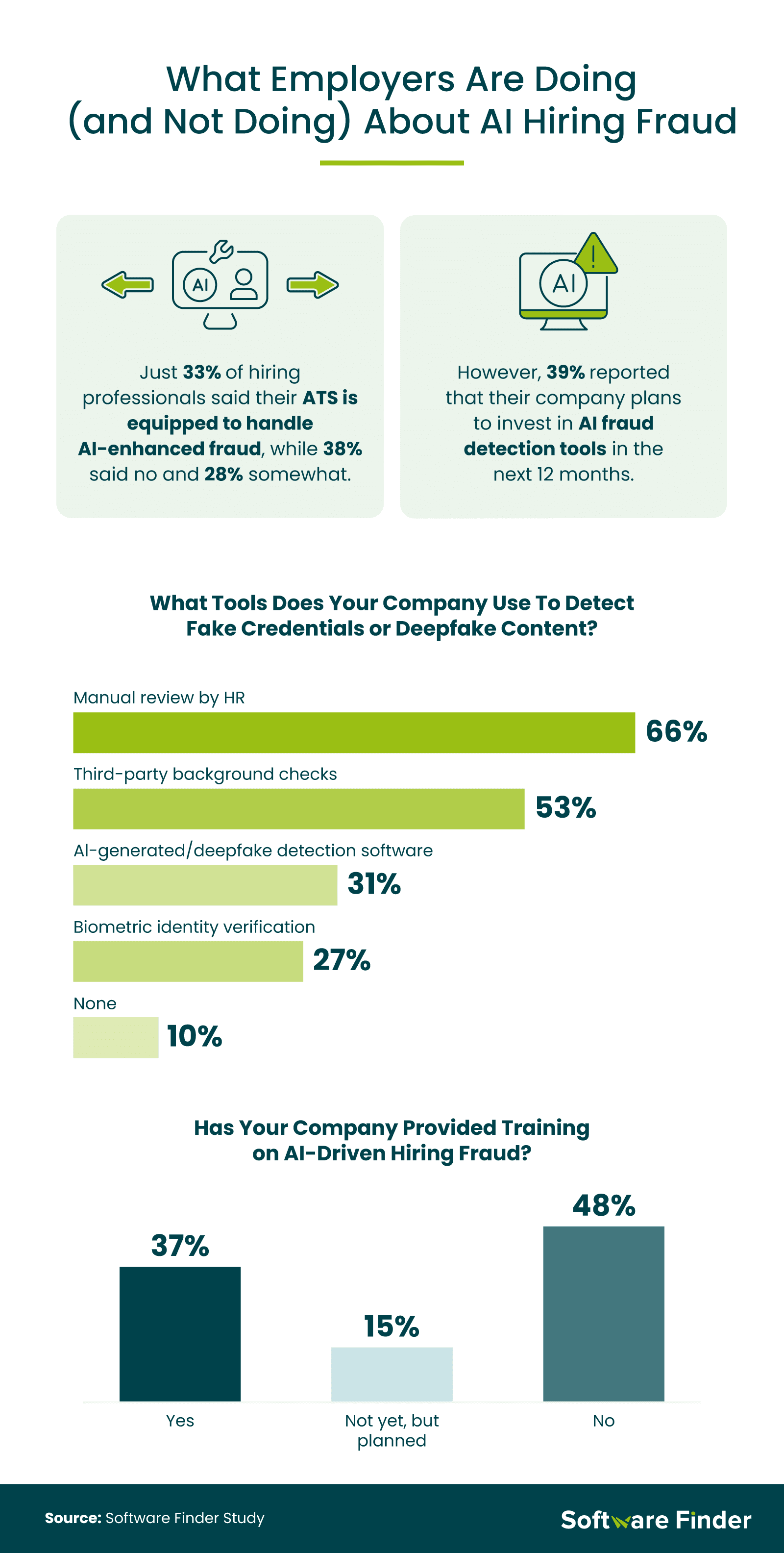

Although many recruiters expressed confidence in detecting AI use, only 31% of companies had implemented AI or deepfake detection software. Hiring professionals were split on whether their existing applicant tracking system (ATS) could effectively handle AI-enhanced applications: 38% said it couldn't, 33% said it could, and 28% weren't sure. Manual HR review remained the most common method, used by two-thirds of employers, and slightly more than half (53%) reported using third-party background checks.

Despite limited adoption so far, there is momentum toward stronger defenses. Nearly 40% of respondents said their company plans to invest in detection tools within the next year. However, training remains a weak point: 48% of HR professionals had not received any instruction on AI-driven hiring fraud, although 15% said their company has training plans in the works.

As the threat grows, hiring professionals are calling for new safeguards and regulatory changes.

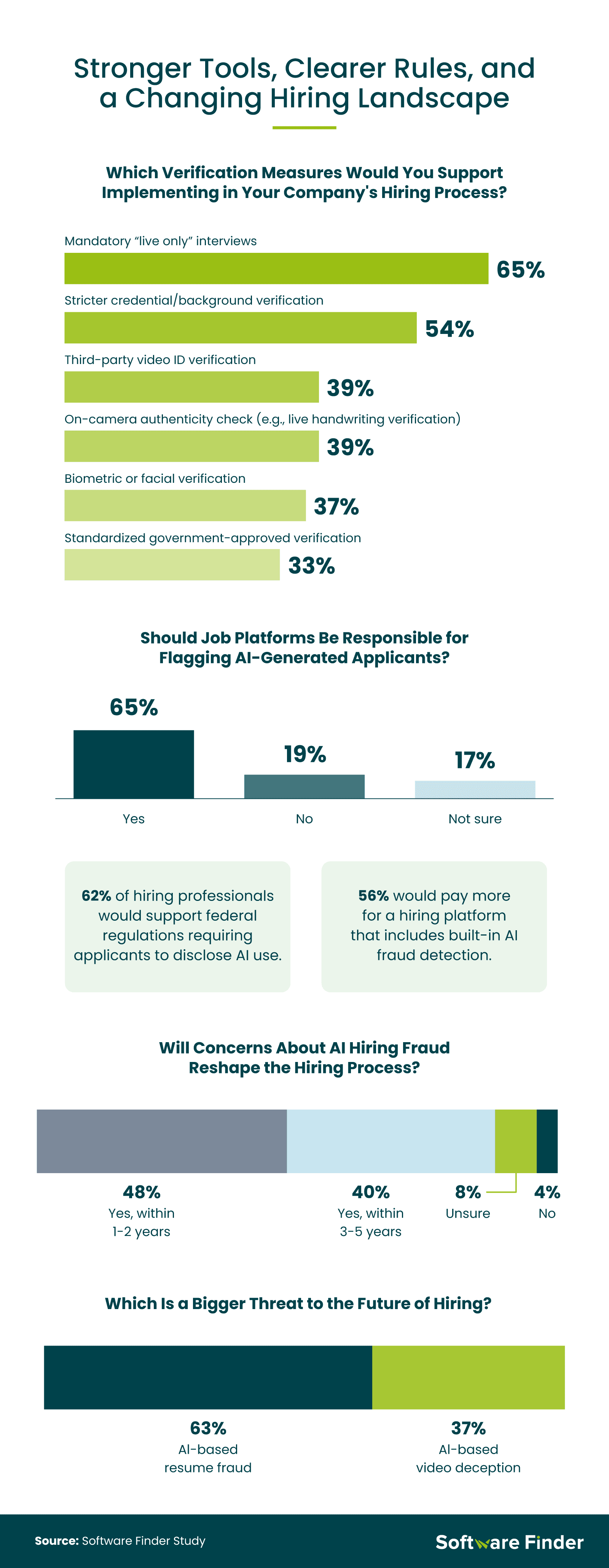

Most respondents (88%) predicted that concerns about AI hiring fraud will fundamentally change the hiring process within five years. Interestingly, AI-based resume manipulation is seen as a greater threat than deepfake video, with 63% of recruiters considering it the bigger risk.

Many recruiters are ready for more stringent verification methods in their company's hiring process. Nearly 7 in 10 said they would support implementing mandatory "live only" interviews to validate candidate identities. Demand is also high for action at the platform level. Almost two-thirds of respondents (65%) believed job sites like LinkedIn and Indeed should be responsible for flagging AI-generated applicants.

There's also strong backing for government regulation. Over 60% of hiring professionals said they would support federal laws requiring job seekers to disclose if they've used AI in their application materials.

AI fraud in hiring is already happening. Many recruiters feel confident they can spot fake applicants, but the low adoption of detection tools and training suggests that confidence may be misplaced. As the pressure to maintain trust in hiring grows, companies must take proactive steps to protect their processes and people.

If teams want to stay ahead, it's time to move from awareness to action. That means investing in tools that can catch AI-generated content, giving recruiters the training they need, and pushing for stronger safeguards across the platforms we rely on. The hiring process is changing fast, and the sooner we adapt, the better prepared we'll be.

Methodology

We surveyed 874 HR professionals and recruiters to explore how AI and deepfakes are affecting the job market. Respondents' average age was 42; 50% were female, 49% were male, and 1% were nonbinary. Data was collected in May 2025.

About Software Finder

Software Finder connects businesses with the right software solutions to drive efficiency, security, and growth. Our platform features in-depth comparisons, verified user reviews, and expert insights to help professionals make informed decisions. Whether you're seeking applicant tracking systems or AI detection tools, we can guide you in your search.

Fair Use Statement

If you found these findings valuable, you're welcome to share them for noncommercial use. Please include a link to SoftwareFinder.com for proper attribution.